SVIRO Dataset and Benchmark

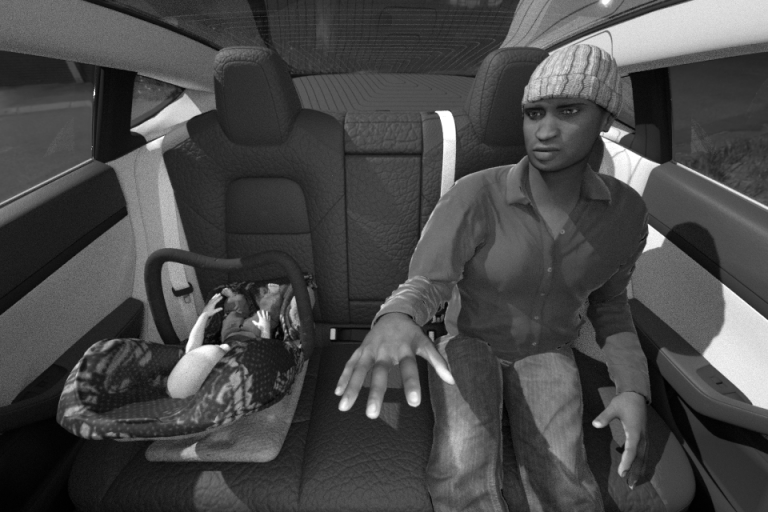

SVIRO is a Synthetic dataset for Vehicle Interior Rear seat Occupancy detection and classification. The dataset consists of 25,000 sceneries across ten different vehicles, for which we provide several simulated sensor inputs and ground-truth data. SVIRO can be used to evaluate how well machine learning models generalize, and how reliable they are, when trained on a limited number of variations. Our goal was to provide a common benchmark for testing approaches when resources are limited, as is common in engineering applications.

We offer a public leaderboard to compare different methods and models. Labels for the test data are now available as well.

We deliberately limited the number of variations: we used the same backgrounds and textures throughout training, but different ones for testing. Additionally, we used different human models, child seats and infant seats for each data split. This makes it possible to test how well machine learning models trained in one vehicle generalize to a new one on the same task. Consequently, the models need to generalize to previously unknown car interiors and unseen intra-class variations.
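The cross-vehicle protocol can be organized as a train/test matrix over vehicles. The Python sketch below shows one way to do this under our own assumptions. It is not SVIRO's official evaluation code. `cross_vehicle_matrix`, `train_fn` and `eval_fn` are hypothetical placeholders for your own training and evaluation routines, and the vehicle names are made up.

```python
from typing import Callable, Dict, List, Tuple, TypeVar

Model = TypeVar("Model")


def cross_vehicle_matrix(
    vehicles: List[str],
    train_fn: Callable[[str], Model],
    eval_fn: Callable[[Model, str], float],
) -> Dict[Tuple[str, str], float]:
    """Train one model per source vehicle and test it on every vehicle.

    Diagonal entries (source == target) use the test split of the training
    vehicle, which still has unseen textures, backgrounds and people;
    off-diagonal entries measure transfer to an unseen vehicle interior.
    """
    results: Dict[Tuple[str, str], float] = {}
    for source in vehicles:
        model = train_fn(source)  # hypothetical: trained only on the source vehicle
        for target in vehicles:
            results[(source, target)] = eval_fn(model, target)
    return results


# Toy usage with dummy callables; the vehicle names are hypothetical.
vehicles = ["vehicle_a", "vehicle_b", "vehicle_c"]
matrix = cross_vehicle_matrix(
    vehicles,
    train_fn=lambda v: v,  # the dummy "model" just remembers its training vehicle
    eval_fn=lambda model, v: 1.0 if model == v else 0.5,
)
for (source, target), score in sorted(matrix.items()):
    print(f"{source} -> {target}: {score:.2f}")
```

The gap between the diagonal and off-diagonal entries indicates how much performance is lost when a model meets a vehicle interior it was not trained on.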

We provide RGB images, simulated infrared images and depth images. For each synthetic scenery, the dataset contains bounding boxes for object detection, masks for semantic and instance segmentation, and keypoints for pose estimation, as well as images of each individual seat for classification.
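Bounding boxes and instance masks describe the same objects, so one can be derived from, or checked against, the other. The sketch below computes tight boxes from an instance mask. It assumes the mask has already been loaded as a 2D grid of integer instance IDs with 0 as background. This encoding is our assumption and may not match the released SVIRO files. The function name `boxes_from_instance_mask` is hypothetical.

```python
from typing import Dict, List, Tuple

Box = Tuple[int, int, int, int]  # (x_min, y_min, x_max, y_max), inclusive pixel coordinates


def boxes_from_instance_mask(mask: List[List[int]], background: int = 0) -> Dict[int, Box]:
    """Return the tight bounding box of every instance ID found in the mask.

    Assumption: each pixel stores an integer instance ID, `background` marks empty pixels.
    """
    boxes: Dict[int, Box] = {}
    for y, row in enumerate(mask):
        for x, instance_id in enumerate(row):
            if instance_id == background:
                continue
            if instance_id not in boxes:
                boxes[instance_id] = (x, y, x, y)
            else:
                x0, y0, x1, y1 = boxes[instance_id]
                boxes[instance_id] = (min(x0, x), min(y0, y), max(x1, x), max(y1, y))
    return boxes


# Toy 4x5 mask with two instances (IDs 1 and 2); 0 is background.
toy_mask = [
    [0, 1, 1, 0, 0],
    [0, 1, 1, 0, 2],
    [0, 0, 0, 0, 2],
    [0, 0, 0, 2, 2],
]
print(boxes_from_instance_mask(toy_mask))  # {1: (1, 0, 2, 1), 2: (3, 1, 4, 3)}
```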

SVIRO-Illumination

Based on SVIRO, we created images for three vehicle interiors. For each vehicle, we randomly generated 250 training and 250 test sceneries, each rendered under 10 different illumination and environmental conditions. This dataset can be used to investigate how changing illumination and environment affect the classification accuracy and robustness of machine learning models.
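Every scenery exists under 10 conditions, so robustness can be measured per scene as well as by overall accuracy. A model can be accurate on average and still change its prediction when only the lighting changes. The sketch below computes one possible measure: the fraction of scenes whose renderings all receive the same prediction. This is our own illustrative metric, not necessarily the one used in the SVIRO-Illumination paper. The tuple layout and the name `scene_consistency` are assumptions.

```python
from collections import defaultdict
from collections.abc import Hashable
from typing import Dict, Iterable, Set, Tuple


def scene_consistency(predictions: Iterable[Tuple[str, int, Hashable]]) -> float:
    """Fraction of scenes whose renderings all receive the same prediction.

    Each item is (scene_id, condition_index, predicted_label), where
    condition_index identifies one of the illumination conditions.
    """
    labels_per_scene: Dict[str, Set[Hashable]] = defaultdict(set)
    for scene_id, _condition, label in predictions:
        labels_per_scene[scene_id].add(label)
    if not labels_per_scene:
        return 0.0
    consistent = sum(1 for labels in labels_per_scene.values() if len(labels) == 1)
    return consistent / len(labels_per_scene)


# Toy predictions: scene "s0" is stable, scene "s1" flips under one condition.
preds = [("s0", k, "adult") for k in range(10)]
preds += [("s1", k, "empty" if k < 9 else "adult") for k in range(10)]
print(scene_consistency(preds))  # 0.5
```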

SVIRO-NoCar

We placed humans, child seats and infant seats as they would be positioned in a vehicle interior. Instead of a vehicle, however, the background was an HDR image selected randomly from a pool of available HDR images. This way we can generate input-target pairs in which both images show the same scene but differ in the property we want the model to become invariant to: the dominant background. We created 2938 training and 2981 test sceneries, each rendered with 10 different backgrounds. We used this dataset to show that it is possible to learn invariances on synthetic images and transfer them to real images.
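The input-target pairing described above can be sketched as drawing two distinct background renderings of the same scenery. This is a minimal illustration, assuming all 10 renderings of a scenery are available as a list. Picking both images uniformly at random is our own choice, and the sampling strategy used in the SVIRO-NoCar paper may differ. The file names and `sample_pair` are hypothetical.

```python
import random
from typing import Sequence, Tuple


def sample_pair(renderings: Sequence[str], rng: random.Random) -> Tuple[str, str]:
    """Pick two different renderings of one scene as (input, target).

    Both images contain the same people and seats; only the background differs,
    so a model trained to map input -> target is pushed to ignore the background.
    """
    if len(renderings) < 2:
        raise ValueError("need at least two renderings of the scene")
    source, target = rng.sample(list(renderings), 2)  # two distinct items
    return source, target


# Hypothetical file names for the 10 background variants of one scene.
scene = [f"scene_0042_bg{k:02d}.png" for k in range(10)]
rng = random.Random(0)
inp, tgt = sample_pair(scene, rng)
print(inp, "->", tgt)
```

Drawing a fresh pair every epoch exposes the model to many background combinations of the same scenery without rendering additional images.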

SVIRO-Uncertainty

For safety-critical applications, it is particularly important that models provide uncertainty estimates together with their predictions, because a deployed system must be able to account for unforeseen events it may encounter. Hence, we created images of a vehicle interior that can be used to assess a model's reliability. For each of the 3 seat positions on the rear bench, the model should classify which object occupies it, with empty being one possible choice. We created two training datasets: one using adult passengers only (4384 sceneries and 8 classes) and one using adults, child seats and infant seats (3515 samples and 64 classes). We also created fine-grained test sets to assess reliability at several difficulty levels.
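The class counts are consistent with treating each class as a combination of per-seat labels over the 3 seats:

- Adults only: {empty, adult} gives 2³ = 8 classes.
- Mixed version: {empty, adult, child seat, infant seat} gives 4³ = 64 classes.

The exact per-seat label sets are our assumption. The sketch below shows how per-seat labels could be packed into a single class index, read as a base-k number, and unpacked again. The label strings, the seat order and the helpers `encode`/`decode` are hypothetical.

```python
from itertools import product
from typing import Sequence, Tuple

# Assumed per-seat label sets; they match the stated 2**3 = 8 and 4**3 = 64 classes.
ADULTS_ONLY = ("empty", "adult")
MIXED = ("empty", "adult", "child_seat", "infant_seat")
NUM_SEATS = 3  # hypothetical order: left, middle, right seat of the rear bench


def encode(seat_labels: Sequence[str], vocabulary: Sequence[str]) -> int:
    """Map per-seat labels to one scene-level class index (a base-k number)."""
    index = 0
    for label in seat_labels:
        index = index * len(vocabulary) + vocabulary.index(label)
    return index


def decode(index: int, vocabulary: Sequence[str], num_seats: int = NUM_SEATS) -> Tuple[str, ...]:
    """Inverse of encode: recover the per-seat labels from a class index."""
    labels = []
    for _ in range(num_seats):
        index, digit = divmod(index, len(vocabulary))
        labels.append(vocabulary[digit])
    return tuple(reversed(labels))


for vocab in (ADULTS_ONLY, MIXED):
    classes = list(product(vocab, repeat=NUM_SEATS))
    print(len(classes))  # 8, then 64
    # product() enumerates combinations in the same order as encode() numbers them
    assert [encode(c, vocab) for c in classes] == list(range(len(classes)))
    assert all(decode(encode(c, vocab), vocab) == c for c in classes)
```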

SVIRO-InterCar

As with SVIRO-Illumination, we generated each scenery several times, varying one key feature. For SVIRO-Illumination this was the illumination condition; for SVIRO-InterCar we changed the entire vehicle interior. This means that not only does the vehicle interior look different, but the camera perspective is adapted accordingly. Since each vehicle has different windows, the illumination changes as well. We generated two versions: one with adults only (1000 sceneries) and one with adults, infants in infant seats and children in child seats (990 sceneries). All 10 SVIRO vehicles were used for this dataset.
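In SVIRO-InterCar, each scenery appears in every vehicle. A random image-level train/validation split would therefore put the same people and seats on both sides and overstate validation accuracy. One common remedy is to split by scenery ID, so that all vehicle renderings of a scenery stay together. The sketch below assumes each sample is identified by a (scenery, vehicle) pair. Those identifiers and `split_by_scenery` are hypothetical.

```python
import random
from typing import List, Sequence, Tuple

Sample = Tuple[str, str]  # (scenery_id, vehicle_name); both hypothetical identifiers


def split_by_scenery(
    samples: Sequence[Sample], val_fraction: float, seed: int = 0
) -> Tuple[List[Sample], List[Sample]]:
    """Split samples so that all vehicle renderings of a scenery stay together."""
    sceneries = sorted({scenery for scenery, _ in samples})
    random.Random(seed).shuffle(sceneries)
    n_val = round(len(sceneries) * val_fraction)
    val_ids = set(sceneries[:n_val])
    train = [s for s in samples if s[0] not in val_ids]
    val = [s for s in samples if s[0] in val_ids]
    return train, val


# Toy data: 20 sceneries, each rendered in 10 (hypothetical) vehicles.
samples = [(f"scenery_{i:03d}", f"vehicle_{v}") for i in range(20) for v in range(10)]
train, val = split_by_scenery(samples, val_fraction=0.2)
print(len(train), len(val))  # 160 40
```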

Citation

When using the SVIRO dataset in your research, please cite us using the following:

@INPROCEEDINGS{DiasDaCruz2020SVIRO,
  author = {Steve {Dias Da Cruz} and Oliver Wasenm\"uller and Hans-Peter Beise and Thomas Stifter and Didier Stricker},
  title = {SVIRO: Synthetic Vehicle Interior Rear Seat Occupancy Dataset and Benchmark},
  booktitle = {IEEE Winter Conference on Applications of Computer Vision (WACV)},
  year = {2020}
}

When using the SVIRO-Illumination dataset in your research, please cite us using the following:

@INPROCEEDINGS{DiasDaCruz2021Illumination,
  author = {Steve {Dias Da Cruz} and Bertram Taetz and Thomas Stifter and Didier Stricker},
  title = {Illumination Normalization by Partially Impossible Encoder-Decoder Cost Function},
  booktitle = {IEEE Winter Conference on Applications of Computer Vision (WACV)},
  year = {2021}
}

When using the SVIRO-Uncertainty dataset in your research, please cite us using the following:

@INPROCEEDINGS{DiasDaCruz2022Uncertainty,
  author = {Steve {Dias Da Cruz} and Bertram Taetz and Thomas Stifter and Didier Stricker},
  title = {Autoencoder Attractors for Uncertainty Estimation},
  booktitle = {Proceedings of the IEEE International Conference on Pattern Recognition (ICPR)},
  year = {2022}
}

When using the SVIRO-NoCar dataset in your research, please cite us using the following:

@INPROCEEDINGS{DiasDaCruz2022Syn2real,
  author = {Steve {Dias Da Cruz} and Bertram Taetz and Thomas Stifter and Didier Stricker},
  title = {Autoencoder for Synthetic to Real Generalization: From Simple to More Complex Scenes},
  booktitle = {Proceedings of the IEEE International Conference on Pattern Recognition (ICPR)},
  year = {2022}
}

License

This work, including all datasets and benchmarks, is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License.

This means that:

- You must give appropriate credit,

- You may not use the material for commercial purposes,

- If you remix, transform, or build upon the material, you must distribute your contributions under the same license as the original.

Acknowledgement

Steve Dias Da Cruz is supported by the Luxembourg National Research Fund (FNR) under grant number 13043281. This work was partially funded by the MECO project “Artificial Intelligence for Safety-Critical Complex Systems” and by the European Union’s Horizon 2020 Program in the project VIZTA (826600). We thank Michelle Gosha for helping with the data generation process.